Emerging Technologies

Circuits

Beyond-Crossbar Deep Learning Using Gated Synapses: Memristors are among the most promising emerging technologies for deep neural networks (DNNs). With multibit precision and nonvolatile resistive programming, memristors can store a DNN’s synaptic weights in a dense and scalable crossbar. While a key advantage of the memristor crossbar is its scalability via two-electrode arrays, this same architecture makes it difficult to support emerging DNN layers. DNN architectures have evolved remarkably over the last few years. Novel layers such as inception modules, residual layers, dynamic gating, polynomial layers, self-attention, and hypernetworks have recently been added to the repository of DNN building blocks. Unlike classical layers, which multiply only inputs and weights, these emerging layers operate on higher-order interactions, correlating multiple variables simultaneously to improve computational efficiency and representation capacity. For example, hypernetworks integrate the application context by jointly associating three quantities, viz., inputs, weights, and context features, to make a prediction. However, with only two controlling electrodes, memristor crossbars cannot natively support such layers.
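
To make the distinction concrete, here is a minimal Python sketch. It assumes a hypernetwork-style layer can be written as a third-order contraction y_i = Σ_j Σ_k T[i][j][k]·x[j]·c[k]; the tensor T, the context vector c, and all function names are hypothetical illustrations, not the lab's formulation. Collapsing T with the context gives an effective weight matrix that changes whenever c changes, so a crossbar holding one fixed conductance matrix cannot evaluate the layer in a single read.

```python
# Hypothetical illustration: classical layer vs. an assumed third-order layer.

def linear_layer(W, x):
    """Classical layer: y[i] = sum_j W[i][j] * x[j] (what a crossbar computes)."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def context_weights(T, c):
    """Collapse the third-order tensor with the context:
    W_eff[i][j] = sum_k T[i][j][k] * c[k]."""
    return [[sum(t_ijk * c_k for t_ijk, c_k in zip(t_ij, c)) for t_ij in t_i] for t_i in T]


def higher_order_layer(T, x, c):
    """Assumed higher-order layer: inputs, weights, and context multiply together."""
    return linear_layer(context_weights(T, c), x)


# Toy 2x2x2 tensor (made-up values); T[i][j] lists the weights over context k.
T = [[[1, 0], [0, 2]], [[3, 1], [1, 0]]]
x = [1, 2]

print(higher_order_layer(T, x, [1, 1]))  # [5, 6]
print(higher_order_layer(T, x, [1, 0]))  # [1, 5]
```

The same input x yields different outputs for different contexts because the effective weights themselves depend on c.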

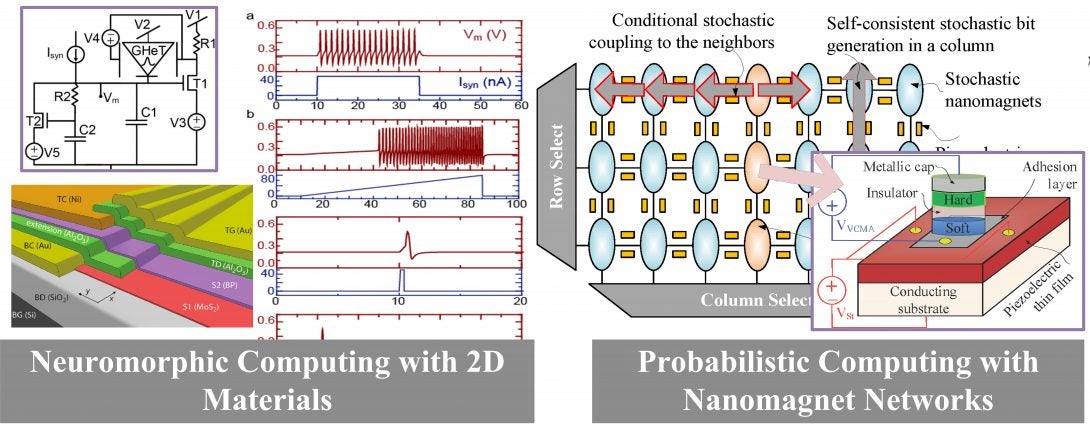

To overcome this critical limitation, my lab has presented beyond-crossbar deep learning architectures using a novel gated memristive device, the memtransistor. Unlike a typical crossbar, which can compute input-weight products along only one dimension at a time, our architecture can process products in multiple dimensions in parallel. Therefore, the higher-order multiplicative interactions in emerging DNN layers such as self-attention and hypernetworks are performed natively by our system. Moreover, we have shown that the memtransistor’s gate control can also be used for on-chip continuous learning.
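
One way to see why a gate terminal helps is a first-order transistor model. The sketch below assumes the memtransistor behaves like a MOSFET in its linear (triode) region at small drain-source voltage, where I_d ≈ k·(V_g - V_t)·V_ds: the stored weight sits in the programmable factor k, the input drives V_ds, and the gate overdrive carries a third variable such as a context or attention value. This textbook approximation, the threshold value, the gate wiring (one shared gate line per input line), and every name are assumptions for illustration, not the lab's published device model.

```python
# Idealized gated synapse (assumption): first-order triode-region model
#     I_d ~= k * (V_g - V_t) * V_ds
# k:    programmable, nonvolatile weight (hypothetical units)
# V_ds: input voltage on the input line
# V_g:  gate voltage; its overdrive acts as a multiplicative third operand

V_T = 0.5  # hypothetical threshold voltage (V)


def device_current(k, v_gate, v_ds, v_t=V_T):
    """Drain current of one gated synapse; the device is off below threshold."""
    overdrive = max(v_gate - v_t, 0.0)
    return k * overdrive * v_ds


def gated_array_currents(K, v_ds, v_gate):
    """Current on each output line i, summed over inputs j (Kirchhoff's current law).

    K[i][j]   -- stored weight of the device at (i, j)
    v_ds[j]   -- input voltage applied to input line j
    v_gate[j] -- gate voltage shared by all devices on input line j (assumed wiring)
    """
    return [
        sum(device_current(K[i][j], v_gate[j], v_ds[j]) for j in range(len(v_ds)))
        for i in range(len(K))
    ]


K = [[1.0, 2.0], [0.5, 1.0]]
x = [0.1, 0.2]
# Gate on input line 1 held at threshold: its products are masked out.
print(gated_array_currents(K, x, [1.5, 0.5]))  # approximately [0.1, 0.05]
# Both gates at 1.5 V (overdrive 1.0): plain weighted sum of K and x.
print(gated_array_currents(K, x, [1.5, 1.5]))  # approximately [0.5, 0.25]
```

In this idealization each read returns weight × input × gate products, so a third operand enters the computation without reprogramming the stored weights.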

Probabilistic Spin Graphs: Probabilistic graphs enable reasoning under uncertainty. Computing posterior and marginal probabilities is the cornerstone of any probabilistic graph. However, the exact calculation of these probabilities is known to be intractable in general. Therefore, approximate inference methods, especially those based on stochastic simulation, are prevalent for graph processing.
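
As an illustration of stochastic-simulation inference, the sketch below compares exact enumeration with rejection sampling on a hypothetical three-node network in which Rain and Sprinkler both influence WetGrass; the structure, probabilities, and names are made up for the example. Enumeration sums over every configuration of the unobserved variables, so its cost grows exponentially with their number, whereas the sampler draws each variable given its parents and discards samples that contradict the evidence.

```python
import itertools
import random

# Hypothetical network: Rain -> WetGrass <- Sprinkler (made-up probabilities).
P_R = 0.2  # P(Rain = 1)
P_S = 0.1  # P(Sprinkler = 1)
P_W = {(0, 0): 0.0, (0, 1): 0.9, (1, 0): 0.8, (1, 1): 0.99}  # P(Wet = 1 | R, S)


def joint(r, s, w):
    """Joint probability P(R=r, S=s, W=w) from the factorized network."""
    pr = P_R if r else 1 - P_R
    ps = P_S if s else 1 - P_S
    pw = P_W[(r, s)] if w else 1 - P_W[(r, s)]
    return pr * ps * pw


def exact_posterior_rain_given_wet():
    """Exact P(R=1 | W=1) by enumerating all hidden configurations."""
    num = sum(joint(1, s, 1) for s in (0, 1))
    den = sum(joint(r, s, 1) for r, s in itertools.product((0, 1), repeat=2))
    return num / den


def rejection_sampling_rain_given_wet(n_samples, rng):
    """Approximate P(R=1 | W=1): sample forward, keep only samples with W=1."""
    accepted = rain = 0
    for _ in range(n_samples):
        r = rng.random() < P_R
        s = rng.random() < P_S
        w = rng.random() < P_W[(int(r), int(s))]
        if w:  # consistent with the evidence W = 1
            accepted += 1
            rain += r
    return rain / accepted if accepted else float("nan")


print(exact_posterior_rain_given_wet())  # approximately 0.6947
print(rejection_sampling_rain_given_wet(200_000, random.Random(0)))  # close to the exact value
```

Rejection sampling is only one of several stochastic methods; it wastes samples when the evidence is unlikely, which is one reason hardware that generates random samples quickly is attractive.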

To accelerate graph processing, we have demonstrated probabilistic spin graphs. Probabilistic spin graphs operate using a novel straintronic magnetic tunnel junction (sMTJ), which switches via voltage-controlled magnetic anisotropy. Networks of sMTJs possess two properties critical for graph processing. First, their switching probability can be controlled by the gate voltage. Second, two or more sMTJs can be coupled with programmable correlation coefficients to encode conditional probabilities. Moreover, networks of straintronic MTJs switch on a picosecond time scale, enabling extremely high-throughput and scalable inference. The National Science Foundation (NSF) currently funds this research.
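
The two properties above can be sketched with the generic probabilistic-bit (p-bit) abstraction from the probabilistic-computing literature, assuming each device outputs 1 with probability sigmoid(drive), where the drive stands in for the gate voltage. This is not a model of sMTJ physics; the sigmoid mapping, the bias-plus-coupling form, the toy probabilities, and all names are assumptions. A bias sets one unit's switching probability, and a coupling weight shifts a second unit's drive when the first unit is 1, which is enough to encode a two-node conditional probability table.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def bias_for(p):
    """Drive (logit) that gives switching probability p (assumed sigmoid response)."""
    return math.log(p / (1 - p))


def sample_pbit(drive, rng):
    """Binary stochastic unit: returns 1 with probability sigmoid(drive)."""
    return 1 if rng.random() < sigmoid(drive) else 0


# Hypothetical conditional table: P(A=1) and P(B=1 | A).
P_A = 0.3
P_B_GIVEN_A = {0: 0.2, 1: 0.9}
h_A = bias_for(P_A)                    # bias of unit A
h_B = bias_for(P_B_GIVEN_A[0])         # bias of unit B
J_AB = bias_for(P_B_GIVEN_A[1]) - h_B  # coupling: shifts B's drive when A = 1


def sample_pair(rng):
    """Ancestral sampling: A has no parents, B's drive depends on A."""
    a = sample_pbit(h_A, rng)
    b = sample_pbit(h_B + J_AB * a, rng)
    return a, b


def estimate_conditional(n, rng):
    """Empirical P(B=1 | A=a) for a in {0, 1}."""
    counts = {0: [0, 0], 1: [0, 0]}  # counts[a] = [samples with A=a, of which B=1]
    for _ in range(n):
        a, b = sample_pair(rng)
        counts[a][0] += 1
        counts[a][1] += b
    return {a: counts[a][1] / counts[a][0] for a in (0, 1)}


print(estimate_conditional(100_000, random.Random(1)))  # close to {0: 0.2, 1: 0.9}
```

For larger graphs with loops, samplers typically update each unit repeatedly from its neighbors' current states (Gibbs-style) rather than in a single parent-to-child pass.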

Key Publications:

- Shamma Nasrin, Justine L. Drobitch, Priyesh Shukla, Theja Tulabandhula, Supriyo Bandyopadhyay, and Amit Ranjan Trivedi. “Bayesian reasoning machine on a magneto-tunneling junction network.” Nanotechnology, 2020.

- Megan E. Beck, Ahish Shylendra, Vinod K. Sangwan, Silu Guo, William A. Gaviria Rojas, Hocheon Yoo, Hadallia Bergeron, Katherine Su, Amit R. Trivedi, and Mark C. Hersam. “Spiking neurons from tunable Gaussian heterojunction transistors.” Nature Communications, 2020.

- Shamma Nasrin, Justine L. Drobitch, Supriyo Bandyopadhyay, and Amit Ranjan Trivedi. “Low power restricted Boltzmann machine using mixed-mode magneto-tunneling junctions.” IEEE Electron Device Letters, 2019.

- Shamma Nasrin, Justine L. Drobitch, Supriyo Bandyopadhyay, and Amit Ranjan Trivedi. “Mixed-mode Magnetic Tunnel Junction-based Deep Belief Network.” IEEE International Conference on Nanotechnology (IEEE-NANO), 2019.