AI Circuits and Systems

Circuits

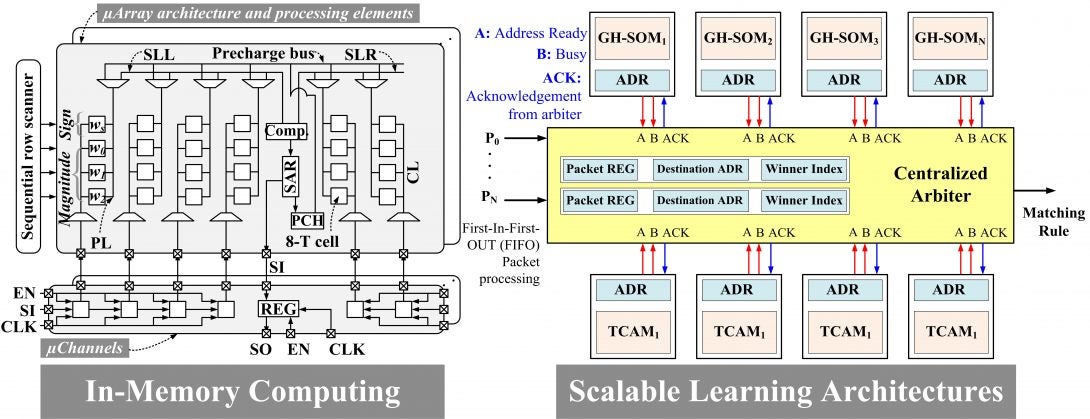

In recent years, compute-in-memory has emerged as a key approach to maximizing the energy efficiency of deep learning. In this approach, memory modules are designed so that the same physical structure both stores the DNN model and performs the majority of computations within the memory units. Even so, current compute-in-memory approaches rely on excessive mixed-signal operations, such as a digital-to-analog converter (DAC) at each input port, which eclipse the energy benefits of the system and reduce its area efficiency.

In prior work, we developed a unique approach to compute-in-memory deep learning by co-optimizing learning operators against the physical and operational constraints of in-memory execution. Our explorations led to a so-called multiplication-free operator, which eliminates the need for DACs in compute-in-memory for multi-bit precision DNNs; these DACs typically plague the efficiency of competing approaches. Furthermore, we proposed a memory-embedded analog-to-digital converter (ADC), which obviates the overhead of a dedicated ADC by using the memory array itself for data domain conversion. Compared to state-of-the-art approaches, our methods led to a 15x improvement in tera-operations per second per watt (TOPS/W), a key metric for deep learning accelerators. Intel currently funds this research.
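To make the idea of a multiplication-free operator more concrete, the following is a minimal software sketch. This page does not define the operator, so the form used here (each term adds the magnitude of one operand with the sign of the other) is an assumption chosen for illustration, and the names `signed_add` and `mf_dot` are hypothetical. It is not a description of the circuit itself.

```python
def sign(v):
    """Return -1, 0, or +1 according to the sign of v."""
    return (v > 0) - (v < 0)


def signed_add(acc, magnitude, s):
    """Add or subtract a non-negative magnitude depending on the sign s.

    No multiplier is involved: the sign only selects add, subtract, or skip.
    """
    if s > 0:
        return acc + magnitude
    if s < 0:
        return acc - magnitude
    return acc


def mf_dot(x, w):
    """Assumed multiplication-free correlation of input x with weights w.

    Illustrative form (an assumption, not taken from this page):
        sum_i  sign(x_i) * |w_i|  +  sign(w_i) * |x_i|
    evaluated with sign-controlled additions only.
    """
    if len(x) != len(w):
        raise ValueError("x and w must have the same length")
    acc = 0
    for xi, wi in zip(x, w):
        acc = signed_add(acc, abs(wi), sign(xi))  # sign of input, magnitude of weight
        acc = signed_add(acc, abs(xi), sign(wi))  # sign of weight, magnitude of input
    return acc


if __name__ == "__main__":
    print(mf_dot([2, -3, 0], [-1, 4, 5]))
```

In this sketch, every term uses only the sign of one operand and the magnitude of the other, so the accumulation reduces to additions and subtractions. That property is one plausible reason such an operator could avoid driving multi-bit analog inputs into the array, though the actual hardware mapping is described in the publications rather than here.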

Key Publications:

- Shamma Nasrin, Srikanth Ramakrishna, Theja Tulabandhula, and Amit Ranjan Trivedi. “Supported-BinaryNet: Bitcell Array-Based Weight Supports for Dynamic Accuracy-Energy Trade-Offs in SRAM-Based Binarized Neural Network.” In IEEE International Symposium on Circuits and Systems (ISCAS), 2020.

- Nick Iliev and Amit Ranjan Trivedi. “Low-Power Sensor Localization in Three-Dimensional Using a Recurrent Neural Network.” IEEE Sensors Letters, 2019.

- Nick Iliev, Alberto Gianelli, and Amit Ranjan Trivedi. “Low Power Speaker Identification by Integrated Clustering and Gaussian Mixture Model Scoring.” IEEE Embedded Systems Letters, 2019.

- Alberto Gianelli, Nick Iliev, Shamma Nasrin, Mariagrazia Graziano, and Amit Ranjan Trivedi. “Low power speaker identification using look up-free Gaussian mixture model in CMOS.” IEEE Symposium in Low-Power and High-Speed Chips (COOL CHIPS), 2019.

- Shih-Chang Hung, Nick Iliev, Balajee Vamanan, and Amit Ranjan Trivedi. “Self-Organizing Maps-based Flexible and High-Speed Packet Classification in Software Defined Networking.” IEEE International Conference on VLSI Design (VLSID), 2019.